Programming Collective Intelligence chapter 10 notes

Published: 2014-05-22

Tagged: python readings guide

Chapter 10 of Programming Collective Intelligence adds another tool to our arsenal of unsupervised learning techniques: non-negative matrix factorization. The example used in the book illustrates the application of this method really well: extracting themes from a collection of news articles using the words from each article. The Book also contrasts this with the previous unsupervised learning tool we went over: clustering. Some articles can't be clustered since they're unique. As with support vector machines from chapter 9, NMF is a more complex method and I won't cover the math behind them here - I'll stick to The Book.

You can find the code to follow along here.

Diving In

The name of the chapter hints at what we'll learn: "Finding Independent Features". This bears resemblance to chapter 3 - clustering methods with one main difference: you can't cluster that which is unique.

The Book first illustrates why any of our previous approaches won't work too well this time. We first start with using a Naive Bayes' classifier:

import newsfeatures

all_w, art_w, art_t = newsfeatures.get_article_words()

word_mat, word_vec = newsfeatures.make_matrix(all_w, art_w)

import docclass

word_matrix_features = lambda x: [word_vec[w] for w in xrange(len(x)) if x[w] > 0]

classifier = docclass.NaiveBayes(word_matrix_features)

classifier.set_db('news.db')

# We train the classifier item by item...

art_t[0]

#[Out]# u"Nigeria Leader: Boko Haram Is 'Al-Qaida Operation'"

classifier.train(word_mat[0], 'terrorism')

art_t[1]

#[Out]# u'You for Sale : Never Forgetting a Face'

classifier.train(word_mat[1], 'technology')

art_t[2]

#[Out]# u'N.A.A.C.P. Selects Cornell Brooks as New President and CEO'

classifier.train(word_mat[2], 'politics')

art_t[3]

#[Out]# u'Death Toll in Turkish Mine Disaster Hits 299 as New Fire Breaks Out'

classifier.train(word_mat[3], 'disaster')

art_t[4]

#[Out]# u'Air Crash In Laos Kills Top Officials For Security'

classifier.train(word_mat[4], 'disaster')

art_t[5]

#[Out]# u'G.M. Is Fined Over Safety and Called a Lawbreaker'

classifier.train(word_mat[5], 'technology')

art_t[6]

#[Out]# u'Judge Orders U.S. to Stop Force-Feeding Syrian Held at Guant\xe1namo'

classifier.train(word_mat[6], 'politics')

And now we use it to classify:

classifier.classify(word_mat[4])

#[Out]# 'disaster'

classifier.classify(word_mat[6])

#[Out]# 'politics'

classifier.classify(word_mat[2])

#[Out]# 'politics'

classifier.classify(word_mat[1])

#[Out]# 'politics'

classifier.classify(word_mat[3])

#[Out]# 'disaster'

classifier.classify(word_mat[5])

#[Out]# 'politics'

Right now it should be obvious why this approach isn't satisfactory - we need a labeled dataset with fairly few labels. Imagine having to process a dataset with a 100 thousand items and 5000 labels. Shudders.

Next up we're urged to try a hierarchical cluster:

# continueing from previous example, so the data is already loaded and crunched

import clusters

clust = clusters.h_cluster(word_mat)

clust = clusters.hcluster(word_mat)

clusters.draw_dendrogram(clust, art_t, jpeg='news.jpg')

clusters.draw_dendrogram(clust, [i.encode('utf8') for i in art_t], jpeg='news.jpg')

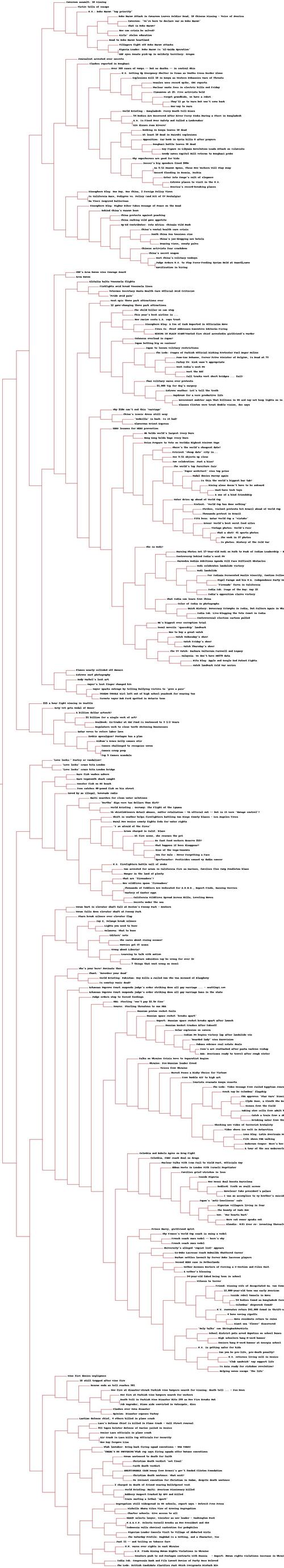

Let's see this beauty (warning: 875kb, 1200x6620 image):

Well, it's somewhat better. Inspecting a few parts of the image we see some pretty good points like "Rare fish washes ashore" and "Rare megamouth shark caught". Then we notice pearls like "Do fast-food workers deserve 15$?" and "What happens if bees disappear". We need something better. Something that would label the data for us. We could then use this data to train a Naive Bayes' classifier. Or something equally as cool.

NMF

Here's where non-negative matrix factorization comes in. As I mentioned before, I won't go into what happens behind the scenes (I don't know yet!) but it involves some linear algebra and, as The Book points out, it's a relatively fresh algorithm from the late 90's.

We'll run our script against two targets. First, a list of rss feeds. Second, some stock data.

The first case will yield two output files: articles.txt and features.txt. The first file will juxtapose rss feed entry titles with groups of features and their weights. The second file will do the opposite - list groups of features and 3 titles and their weights. The second case will combine dates and stock volume changes to expose a few dates when something most likely happened.

Let's run NMF on the rss data. This might take a minute or two, based on your machine:

import newsfeatures

import nmf

import numpy as np

all_w, art_w, art_t = newsfeatures.get_article_words()

word_matrix, word_vec = newsfeatures.make_matrix(all_w, art_w)

import numpy as np

v = np.matrix(word_matrix)

weights, feat, mask = nmf.factorize(v, pc=20, iter=50)

# filter down article titles to match weights ie.

# get rid of titles that have no corresponding weights

art_t2 = np.array(art_t)[mask][0]

top_patterns, pattern_names = newsfeatures.show_features(weights, feat, art_t2, word_vec)

newsfeatures.show_articles(art_t2, top_patterns, pattern_names)

Here's an example from the article.txt file:

North Korea Admits to ‘Serious’ Building Collapse

9.66553278026 ['north', 'korea', 'building', 'pyongyang', 'collapse', 'feared']

2.51229475091 ['world', 'that', 'were', 'this', 'where', 'year']

1.14301745343 ['russian', 'rocket', 'space', 'said', 'friday', 'state']

And here's an example from the features.txt file:

['publisher', 'details', 'times', 'that', 'abramson', 'jill']

(29.23946405297897, u'NYT publisher gives details on firing')

(25.79123588123705, u'After Criticism, Times Publisher Details Decision to Oust Top Editor')

(8.5860086262257198, u'KURTZ: NY Times publisher hits back at Abramson- Turmoil at the top: Why the NYT dumped Jill Abramson')

Let's run NMF on the stock volume data. Luckily the only thing you have to do is execute python stockvolume.py > output.txt, which will fetch a few stock tickers from Yahoo's collection of financial data and apply NMF on it. The script outputs everything to stdout and it's comfortable to redirect this into a file. I annotated the code and hopefully it's clear enough to understand. Let's checkout the output:

Feature 1:

(25061208.097289961, 'YHOO')

(2105308.306952598, 'XOM')

(1469905.365130445, 'PG')

(1401068.61334226, 'AMGN')

(588878.39728755818, 'CVX')

(572258.86160004011, 'DNA')

(471578.254558824, 'BIIB')

(451073.9739113843, 'BP')

(403089.9284251529, 'AVP')

(302140.10453782894, 'CL')

(24056.051438565159, 'GOOG')

(22787.08435232949, 'EXPE')

[(6.7406247840208708, '2006-07-19'), (3.9385522599761766, '2006-01-18'), (3.9183811984287447, '2006-09-19')]

The nameless Feature 1 is strongly tied into with YHOO on 2006-07-19. A quick search of "Yahoo stocks 2006 07 19" leads us an article titled "Weak Sales of Text Ads at Yahoo; Shares Dip". Looks like we got our culprit.

Conclusion

The NMF algorithm presented here is much more of a black box as compared to k-nearest neighbors or Naive Bayes' classifier. However, the seemingly magical information it yields would be well worth going a bit more in depth. I recreated part of the first task (the rss feeds example) using scikit-learn in scikit_nmf.py, but I haven't been able to recreate the ability to link concrete articles from a dataset with a list of features. As far as I got, it should be possible to tie in the data from vectorizer.get_feature_names() with nmf.components_. Definitely putting this on my todo list.

Comments